How Notion MCP Crushed My Dreams

I really thought I had discovered a rapid new way to magically enrich my ad hoc workflows.

The promise is simple enough: give Claude access to my Notion workspace via MCP (Model Context Protocol). At any point in a Claude chat, I could ask the bot to read from, or even update, a Notion database. This is pretty amazing: you now have a chatbot (of your choice, really) that integrates directly with a persistence layer. That unlocks a lot of power for people who might otherwise struggle to maintain a mental model of database tables and entity relationships, let alone build an integration.

Sometime in the late 90s or early 2000s, my Aunt Ruth Ann presciently gathered all of our family's recipes that she could and compiled them into a true family heirloom: an MS Word .doc file.

Now, let's say one of my cousins wants to make that doc easier to use. As an early adopter of AI tools, she drops it into Claude, and now she can chat with it. Later, she even creates a Custom GPT and shares it so that other family members can chat up some dinner ideas.

But sometimes you just want to browse the recipe book.

So, for our story, we'll imagine my cousin has been fiddling with Notion for a while. She's used it to organize a few trips but isn't an expert. She understands how filtering and sorting work in Excel. She's no database expert, for sure, but she's familiar enough to wish the cookbook doc were a Notion database, maybe for meal planning, maybe for appending additional tips and tricks.

My cousin is not going to create a Notion database schema and then copy and paste the recipes one by one. Instead, she might say,

Claude, take this Word document with all our family recipes and make a Notion database that is a recipe box.

You now have a recipe box webpage you can share with family via URL. In one instruction.
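For a sense of what that one instruction replaces, here is a minimal sketch of the kind of request body Notion's public REST API expects when creating a database (POST /v1/databases, as of API version 2022-06-28). This is an illustration under assumptions, not what Claude or the Notion MCP server actually sends. The function name recipe_box_payload, the property names, the select options, and the parent page ID are all hypothetical.

```python
import json


def recipe_box_payload(parent_page_id: str) -> dict:
    """Hypothetical request body for Notion's POST /v1/databases endpoint."""
    return {
        # A database must live under an existing page the integration can access.
        "parent": {"type": "page_id", "page_id": parent_page_id},
        "title": [{"type": "text", "text": {"content": "Family Recipe Box"}}],
        "properties": {
            # Every Notion database needs exactly one title property.
            "Recipe": {"title": {}},
            "Category": {
                "select": {
                    "options": [
                        {"name": "Main"},
                        {"name": "Side"},
                        {"name": "Dessert"},
                    ]
                }
            },
            "Contributor": {"rich_text": {}},
            "Ingredients": {"rich_text": {}},
            "Servings": {"number": {"format": "number"}},
        },
    }


if __name__ == "__main__":
    # Print the payload so the schema can be inspected before sending it.
    print(json.dumps(recipe_box_payload("YOUR_PARENT_PAGE_ID"), indent=2))
```

Even this small schema means choosing property types, select options, and a title column. That is exactly the mental model my cousin never has to build.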

My cousin could go on to chat her way to a dinner calendar, a shopping list, or a methods guide for training young cooks. All of it would be stored in Notion, where it's easy to share, leverage, and evolve on the fly as needs change (to a point, I'm sure).

But regardless of your use case, your work is no longer trapped in a Claude conversation thread. You can post it to an external persistence layer with a simple instruction (albeit after authorizing a connector).

A Poor Man’s Workflow Automation

My research interests include both design methods and tool use. In particular, I’m curious about how designers are combining tools in their workflows and how these workflows are evolving.

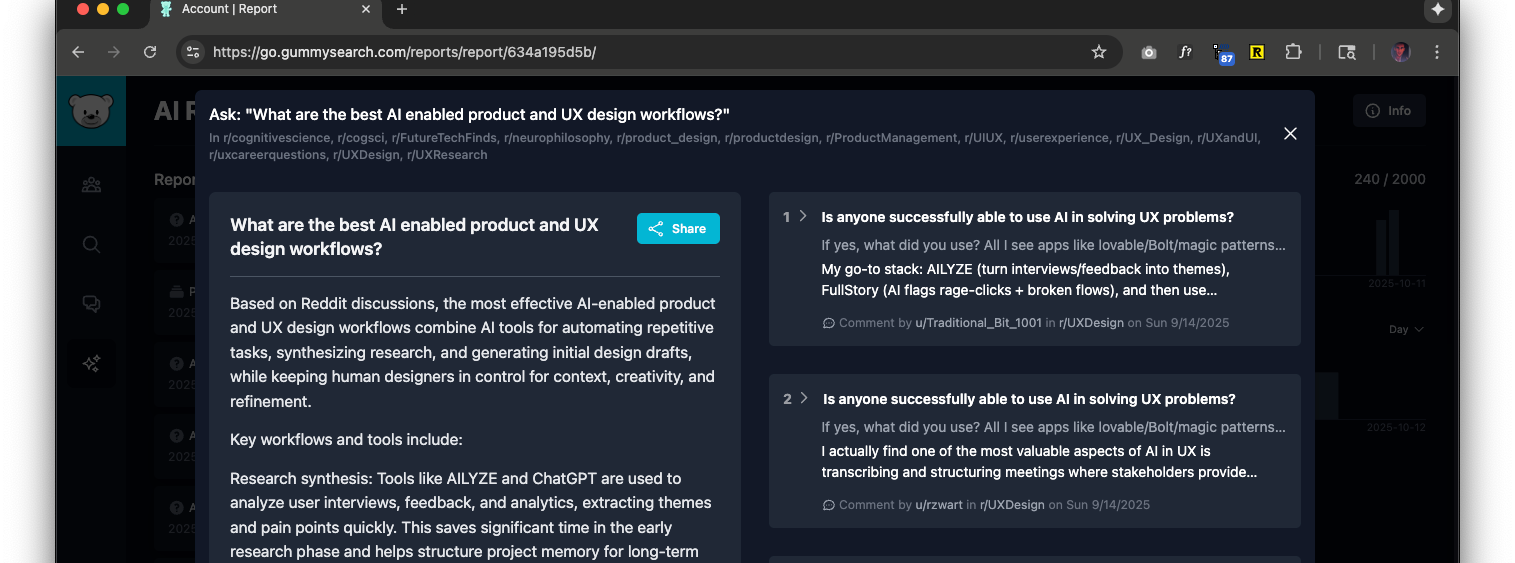

One of my research pipelines starts with a step using GummySearch:

Ask the question of Reddit.

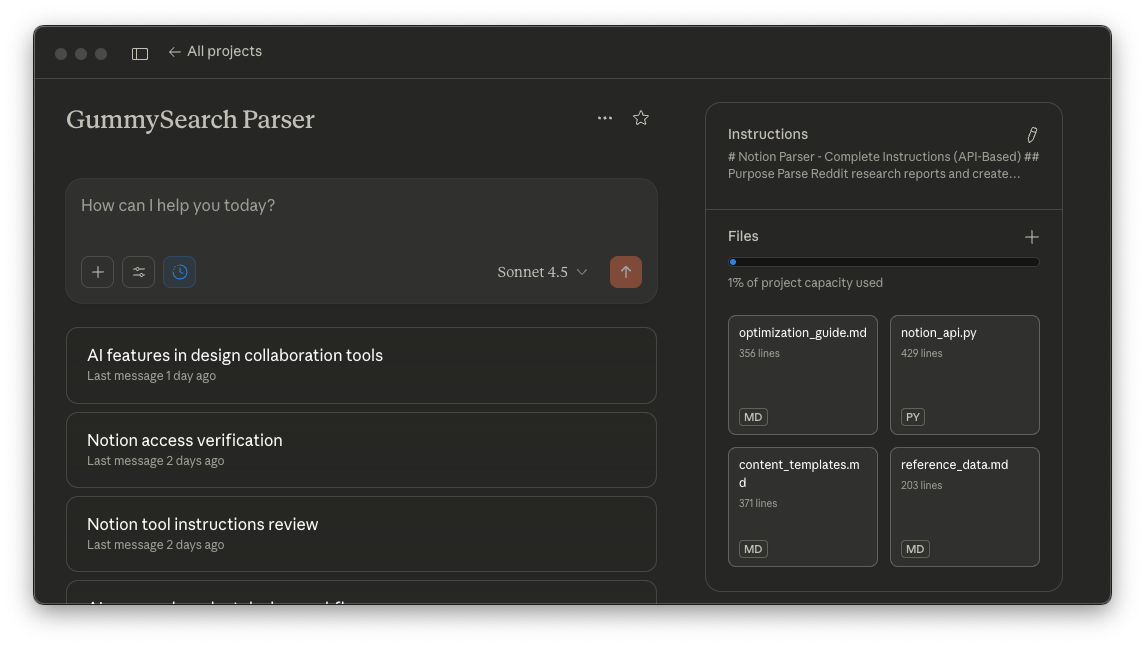

The above input is analyzed for tools and workflow patterns (e.g. in this Claude Project with instructions).

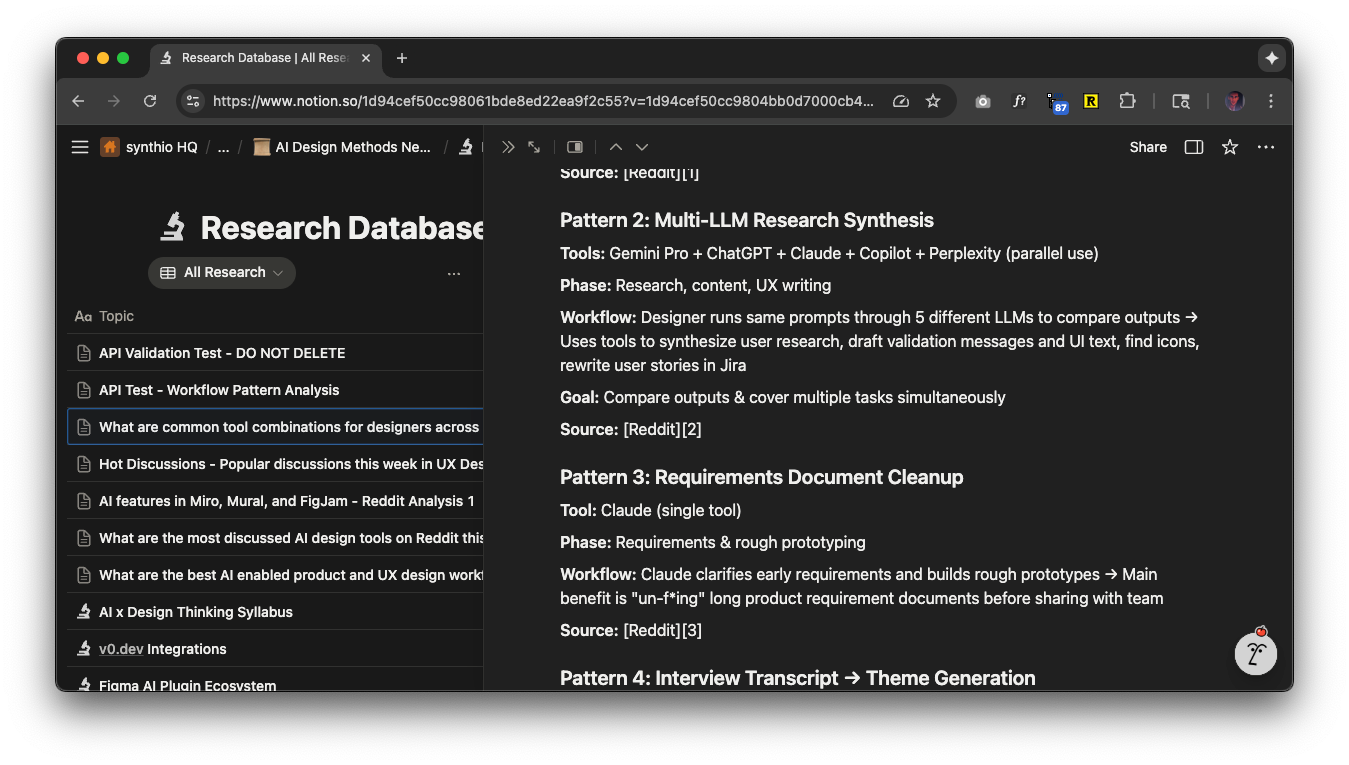

A formatted report is then produced and stored in Notion.

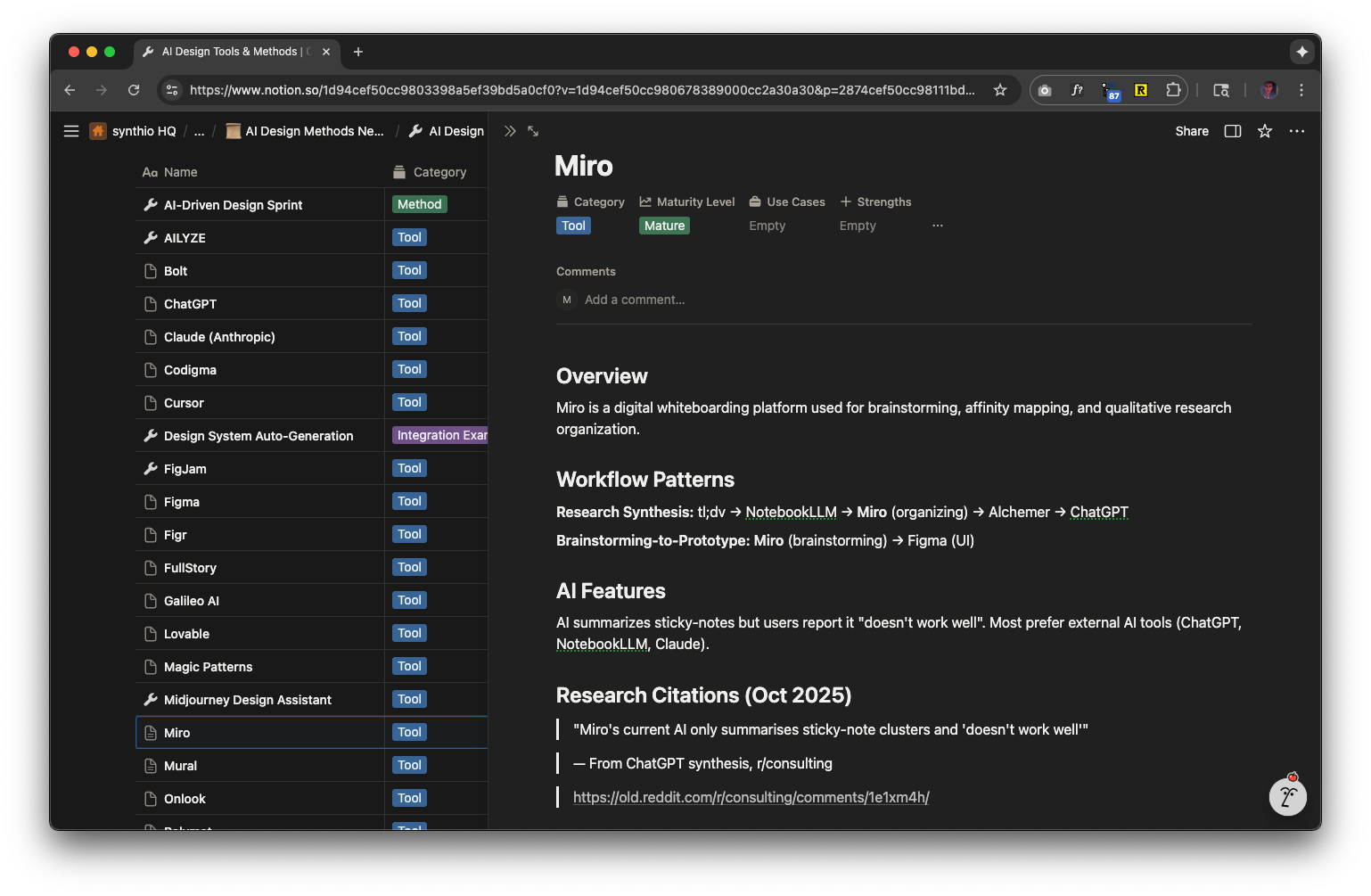

And specific Tools records are updated to include additional workflow insights.

The beauty of this approach for me:

The persistence layer integration is entirely plain language in the project instructions.

I didn't have to build an integration. I asked Claude to look at the couple of databases I already had and use them as context for writing the project instructions.

Easy to make, easier to use.

I drop a report into a new chat within the Claude project. About a minute later, it is a new entry in a database, and its insights have been appended to any existing Tool records.
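Under the hood, "appending to a Tool record" means adding content blocks to an existing Notion page. Below is a minimal sketch of such a request body for Notion's append-block-children endpoint (PATCH /v1/blocks/{block_id}/children). The helper names insight_append_body and rich_text, and the heading-plus-paragraph layout, are hypothetical choices made for illustration. The one real constraint the sketch encodes is Notion's limit of 2,000 characters per rich-text content string, so a long insight gets split into chunks.

```python
import json

NOTION_TEXT_LIMIT = 2000  # max characters per rich-text "content" string


def rich_text(text: str) -> list:
    """Split text into rich-text objects that each respect Notion's length limit."""
    chunks = [text[i:i + NOTION_TEXT_LIMIT] for i in range(0, len(text), NOTION_TEXT_LIMIT)]
    # An empty string still yields one (empty) text object.
    return [{"type": "text", "text": {"content": chunk}} for chunk in chunks or [""]]


def insight_append_body(source_title: str, insight: str) -> dict:
    """Hypothetical body for PATCH /v1/blocks/{tool_page_id}/children."""
    return {
        "children": [
            {
                "object": "block",
                "type": "heading_3",
                "heading_3": {"rich_text": rich_text(f"From: {source_title}")},
            },
            {
                "object": "block",
                "type": "paragraph",
                "paragraph": {"rich_text": rich_text(insight)},
            },
        ]
    }


if __name__ == "__main__":
    print(json.dumps(insight_append_body("Example report", "Example insight text."), indent=2))
```

In my setup, none of this is written by hand. The project instructions describe the intent in plain language, and Claude works out the equivalent calls through MCP.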

Easy to update.

I can discuss and deploy minor Notion schema changes and select values, then update the project instructions to match. (I did eventually move over to Claude Code to manage the project instructions after I broke them out into separate knowledge documents.)

It was also quite easy to debug and improve. Apart from MCP server failures, Claude recovered from 100% of the Notion errors it hit while processing a report, and a few turns of reflective improvement to the instructions went a long way. The exception was when Notion's MCP server returned an ever-changing but always ambiguous error code on any number of seemingly random operations.

How did Notion MCP break my heart?

It's frustratingly unreliable. At least, it was on Friday. And I'm not interested in debugging it.

An aside on how good Claude Code is:

I was excited by the rapid progress but frustrated by the MCP's unreliability, so I used Claude Code to write and test an API-based fallback, leveraging Claude's new code execution sandbox with network access. Amazingly, it worked. But because the API instructions lived in the prompt (the project instructions), Claude wrote the persistence-layer Python code that actually executed at inference time. That code was prone to occasional errors, besides seeming extraordinarily silly as an approach. I did try to "imagine," though, to use Anthropic's own term for it. Rather than optimizing this approach any further, I'm sticking with my more stable research automations for now, and I'm hopeful Notion's MCP service quality improves with time.
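For contrast, here is what a pre-written fallback might look like, so that Claude calls fixed code instead of regenerating it on every run. This is a minimal sketch that assumes a Notion internal integration token and the public REST API; it is not the code I actually ran. The pinned NOTION_VERSION, the retry policy, and the function names build_request and notion_call are my own assumptions. The sketch retries rate limits and server errors with backoff, and on failure it raises an error that includes Notion's JSON error body rather than a bare status code.

```python
import json
import time
import urllib.error
import urllib.request

NOTION_API = "https://api.notion.com/v1"
NOTION_VERSION = "2022-06-28"  # assumption: pin whichever version your integration targets
RETRYABLE = {429, 500, 502, 503, 504}  # rate limits and transient server errors


def build_request(method: str, path: str, body: dict, token: str) -> urllib.request.Request:
    """Build an authenticated Notion API request without sending it."""
    return urllib.request.Request(
        NOTION_API + path,
        data=json.dumps(body).encode("utf-8"),
        method=method,
        headers={
            "Authorization": f"Bearer {token}",
            "Notion-Version": NOTION_VERSION,
            "Content-Type": "application/json",
        },
    )


def notion_call(method: str, path: str, body: dict, token: str, max_attempts: int = 4) -> dict:
    """Send a request, retrying retryable failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            with urllib.request.urlopen(build_request(method, path, body, token), timeout=30) as resp:
                return json.loads(resp.read().decode("utf-8"))
        except urllib.error.HTTPError as err:
            if err.code not in RETRYABLE or attempt == max_attempts:
                # Surface Notion's own error message instead of a bare status code.
                detail = err.read().decode("utf-8", errors="replace")
                raise RuntimeError(f"Notion {method} {path} failed ({err.code}): {detail}") from err
            # Honor a numeric Retry-After header if present, else back off 1s, 2s, 4s...
            retry_after = err.headers.get("Retry-After") if err.headers else None
            delay = float(retry_after) if retry_after and retry_after.isdigit() else 2 ** (attempt - 1)
            time.sleep(delay)
    raise ValueError("max_attempts must be at least 1")
```

As a usage example, notion_call("POST", "/pages", {"parent": {"database_id": db_id}, "properties": {...}}, token) would create a new report entry, assuming the integration has been given access to that database.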
