Conversation Design Is Your New UX Core Competency

Product Designers: AI is no longer confined to a chat window. Elements of conversation are now embedded throughout the UX.

Your personalized daily brief at login? That’s AI starting a conversation by deciding what to tell you. An autofilled database field? That’s AI suggesting something and waiting for your response. An approval queue? That’s AI asking “should I do this?”

The entire interface has become conversational, even when there’s no chat bubble in sight.

We’re designing conversations that happen across multiple surfaces, over time, with non-deterministic responses. And each kind of conversation creates its own uncertainty for users.

In fall 2025, I’m seeing AI in SaaS products do four distinct jobs, each with its own conversational pattern and uncertainty profile.

The four jobs AI does

The four jobs run the gamut from proactive to reactive to autonomous:

Inform: Proactively surface information (daily digests, briefs, summaries)

Suggest: Offer embedded assistance (autocomplete, recommendations, next-step guidance)

Collaborate: Partner in real-time (copilot, chat, pair programming)

Execute: Take autonomous action (agents, automation, task completion)

Each job creates different kinds of uncertainty for users. And each requires different design patterns to manage that uncertainty.

Job 1: Inform (AI decides what you need to know)

This is the job I find most interesting: the AI takes the initiative to surface information without being asked.

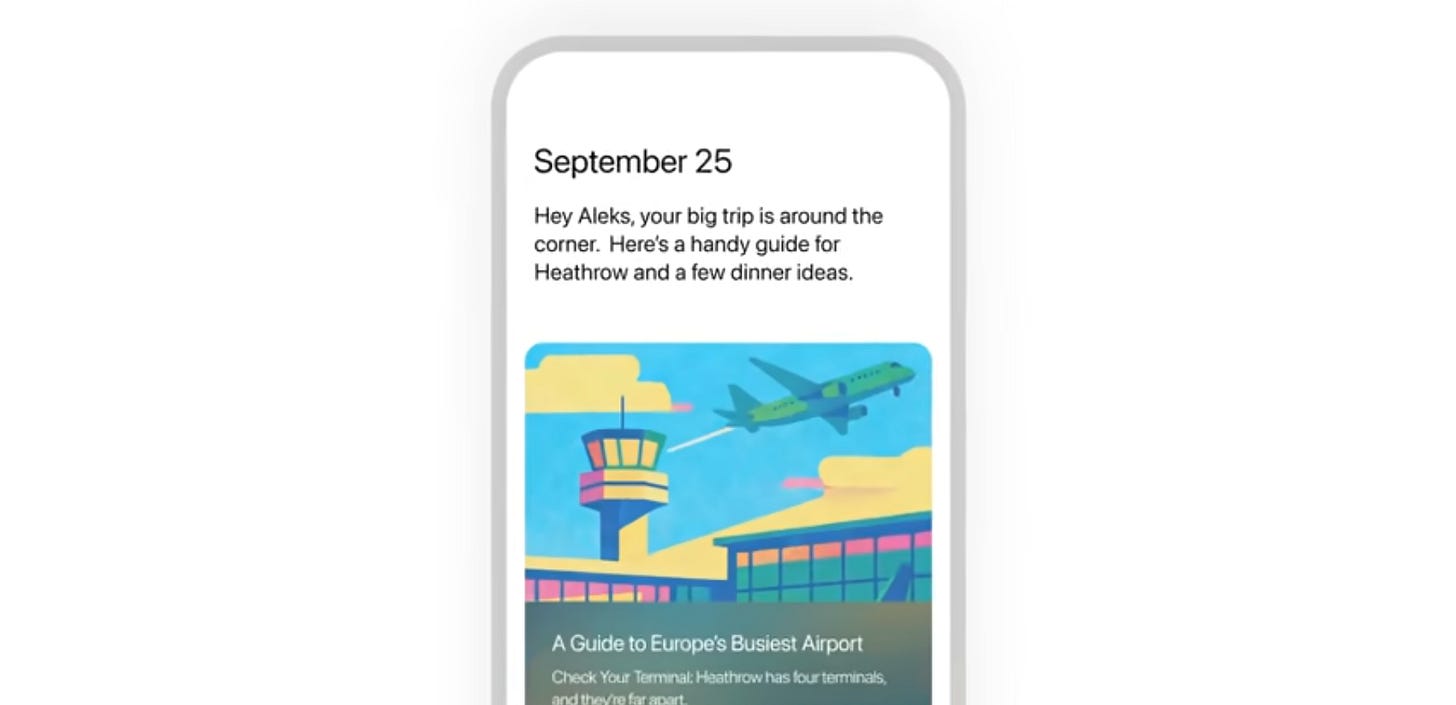

ChatGPT Pulse delivers a personalized daily briefing every morning. It runs asynchronous research overnight, synthesizes your chat history and connected apps (if you opt in), and presents a card-based feed of what it thinks you need to know today. You didn’t ask for it. The AI decided to tell you.

Users perceive AI as a curator deciding what’s important and when to interrupt. This creates a specific kind of trust challenge: the AI is making judgment calls on your behalf before you’re even awake or logged in.

The uncertainty: What should be in the digest? When should it be delivered? What if the AI gets it wrong and surfaces noise instead of signal?

Managing this uncertainty: Build feedback loops that let users signal what’s relevant. Thumbs up/down, dismiss actions, engagement metrics—these teach the AI what each person cares about. The digest gets better over time, not perfect from the start.
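For teams turning this into a spec, here is a minimal Python sketch of such a feedback loop. It assumes each digest card is tagged with a topic. The signal names, the weights, and the `DigestPreferences` class are hypothetical illustrations, not how ChatGPT Pulse or any other product actually works. Each thumbs up, dismissal, or open nudges a per-user topic weight, and the next digest ranks its cards by those weights.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical signal values: how much each user action nudges a topic's weight.
SIGNAL_DELTAS = {
    "thumbs_up": 1.0,
    "opened": 0.5,       # engagement: the user clicked into the card
    "dismissed": -0.5,
    "thumbs_down": -1.0,
}


@dataclass
class DigestPreferences:
    """Hypothetical per-user topic weights learned from feedback on digest cards."""
    weights: dict[str, float] = field(default_factory=lambda: defaultdict(float))
    learning_rate: float = 0.3

    def record(self, topic: str, signal: str) -> None:
        # Nudge the weight instead of overwriting it, so one stray click
        # doesn't reshape the whole digest.
        self.weights[topic] += self.learning_rate * SIGNAL_DELTAS[signal]

    def rank(self, cards: list[tuple[str, str]], limit: int = 3) -> list[str]:
        # cards are (title, topic) pairs; unseen topics start at a neutral 0.0.
        ordered = sorted(cards, key=lambda card: self.weights[card[1]], reverse=True)
        return [title for title, _ in ordered[:limit]]


prefs = DigestPreferences()
prefs.record("billing", "thumbs_up")
prefs.record("marketing", "dismissed")
cards = [
    ("Q3 campaign recap", "marketing"),
    ("Overdue invoices", "billing"),
    ("New hires this week", "people"),
]
print(prefs.rank(cards))  # ['Overdue invoices', 'New hires this week', 'Q3 campaign recap']
```

Using a small learning rate rather than hard rules mirrors the point above: the digest improves gradually instead of being perfect from the start.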

Job 2: Suggest (AI offers options, you decide)

This is embedded assistance. The AI makes suggestions in the flow of your work, and you accept, reject, or ignore them.

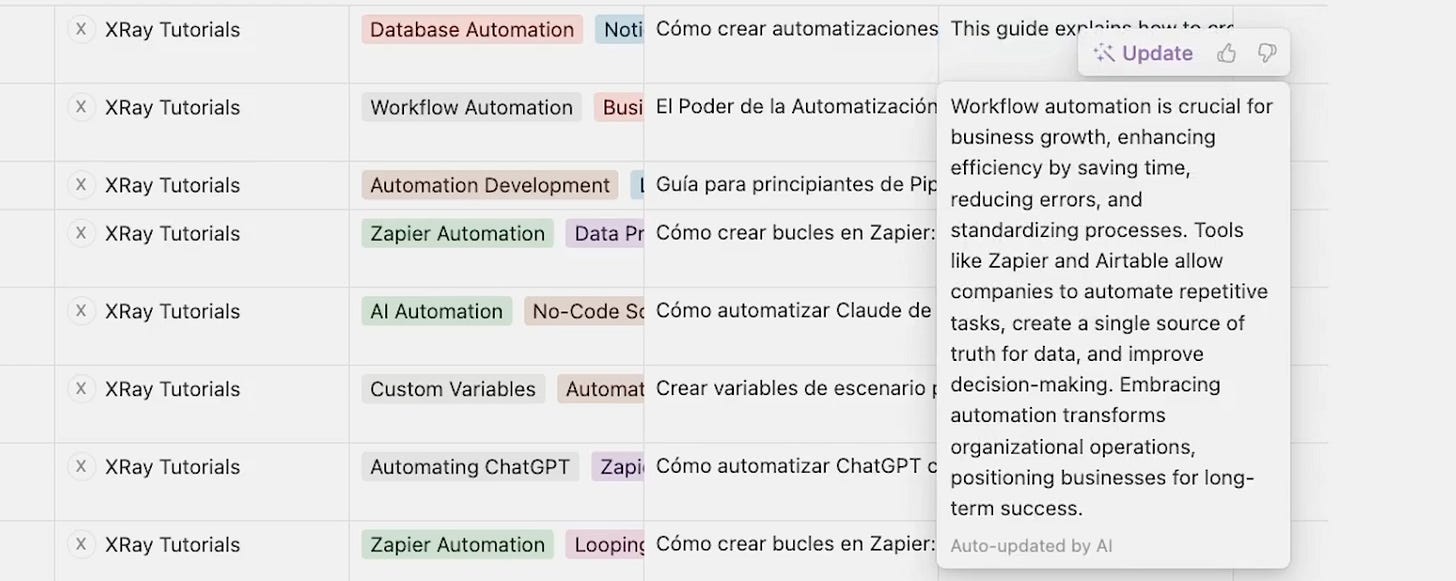

Notion’s Database Autofill quietly fills in database fields when it can, and users can accept, reject, or ignore the suggestions. No chat interface. No “AI mode” to enter. Just helpful suggestions that appear when relevant.

Users perceive the AI as a precision assistant—offering micro-level support that’s easy to accept or ignore.

The uncertainty: Is this suggestion useful? Trustworthy? How is it influencing my own sensemaking? Is it engaging my critical thinking process or doing the work I don’t care to remember?

Managing this uncertainty: Make suggestions easy to override. The default should be “ignore and keep working.” Accepting a suggestion should feel like a shortcut, not a commitment. When users can dismiss without friction, they’ll trust the AI to keep trying.

Job 3: Collaborate (AI as thinking partner)

This is real-time partnership. You and the AI work together, iterating toward a solution.

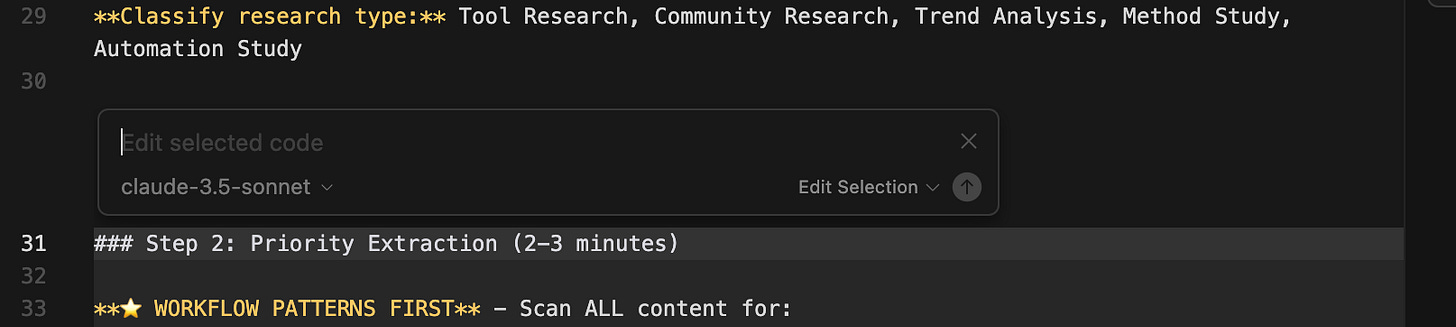

Cursor’s Cmd+K inline edit is collaborative. You describe what you want, the AI proposes changes, and you review them, then accept or refine. It’s a conversation, not a command.

The AI isn’t just executing—it’s thinking with you.

Users perceive the AI as a thinking partner, working alongside them to refine ideas and solve problems. This requires the AI to maintain context across multiple turns and to make its reasoning visible along the way.

The uncertainty: Is the AI understanding what I actually want? Do I understand what the AI is capable of? How do I make sense of a long-running conversation as it expands? How do I know when we’re done?

Managing this uncertainty: Make the conversation iterative—let users refine, redirect, and build on previous responses. Show the AI’s reasoning so users can judge whether they’re on the right track. Provide undo/redo so users can backtrack without starting over.
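Undo/redo in a collaborative session can be modeled as a linear version history. The sketch below is a hypothetical Python illustration (the `DraftHistory` class and its methods are invented for this example), not Cursor’s or any other product’s implementation. Each AI proposal or user refinement that the user keeps becomes a new version, and redirecting after an undo discards the abandoned branch.

```python
class DraftHistory:
    """Hypothetical undo/redo history for an AI collaboration session.

    Each kept AI proposal or user refinement becomes a new version,
    so users can backtrack without restarting the conversation.
    """

    def __init__(self, initial: str) -> None:
        self._versions = [initial]
        self._cursor = 0  # index of the version currently shown

    @property
    def current(self) -> str:
        return self._versions[self._cursor]

    def apply(self, new_version: str) -> None:
        # A new change after an undo drops the abandoned "redo" branch,
        # matching how undo/redo behaves in most editors.
        del self._versions[self._cursor + 1:]
        self._versions.append(new_version)
        self._cursor += 1

    def undo(self) -> str:
        if self._cursor > 0:
            self._cursor -= 1
        return self.current

    def redo(self) -> str:
        if self._cursor < len(self._versions) - 1:
            self._cursor += 1
        return self.current


history = DraftHistory("v1: user's first request")
history.apply("v2: AI proposal")
history.apply("v3: user refinement")
print(history.undo())  # v2: AI proposal
```

A linear history is the simplest choice; a product that lets users compare alternative directions side by side might keep a branching tree instead.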

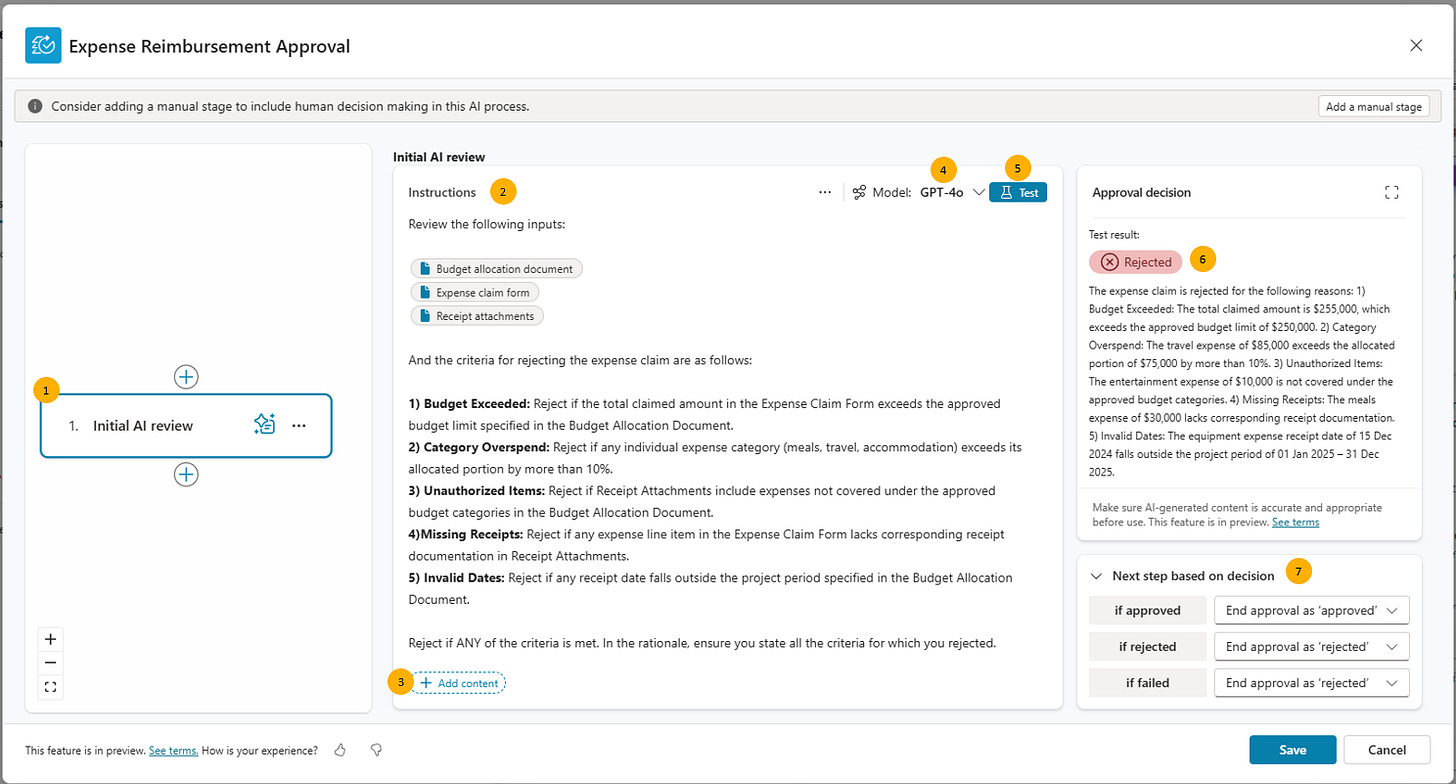

Job 4: Execute (AI takes action autonomously)

This is where AI does work on your behalf, often without real-time supervision. I’m mostly seeing it in enterprise software, though it’s emerging in consumer products too. This isn’t copilot mode (where you’re working together in real time). This is asynchronous execution. The agent does the work, then asks: “Should I do this?”

Users perceive the AI as a coworker—capable of taking action but requiring oversight. This is why approval queues, contextual action history, and performance visibility are essential. The AI is doing real work that has consequences, so users need mechanisms to verify, approve, and learn from what it does.

The uncertainty: Did the AI do the right thing? How do I know what it did? What if it made a mistake I don’t catch? Is approving AI actions just as burdensome as doing the work myself?

Managing this uncertainty: Design approval queues with clear handoff patterns—the agent works, then surfaces its work for human review. Show contextual action history so users can see what the AI did to this specific account or object. Make the approval process teach the system what good judgment looks like.
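The handoff pattern can be sketched as a small data model. This is a hypothetical Python illustration: the `ApprovalQueue` and `ProposedAction` names, the action types, and the use of per-type approval rates as the learning signal are all assumptions made for the example. The agent proposes actions against a business object, a human approves or rejects them with an optional note, and the same records power the per-object action history.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class ProposedAction:
    object_id: str    # the business object the agent acted on, e.g. a tenant's unit
    action_type: str  # hypothetical labels such as "send_reminder"
    summary: str      # what the agent wants to do, in plain language
    status: Status = Status.PENDING
    reviewer_note: str = ""


class ApprovalQueue:
    """Hypothetical handoff queue: the agent proposes, a human decides."""

    def __init__(self) -> None:
        self._actions: list[ProposedAction] = []

    def propose(self, action: ProposedAction) -> None:
        self._actions.append(action)

    def pending(self) -> list[ProposedAction]:
        return [a for a in self._actions if a.status is Status.PENDING]

    def decide(self, action: ProposedAction, approve: bool, note: str = "") -> None:
        action.status = Status.APPROVED if approve else Status.REJECTED
        action.reviewer_note = note  # the "why" is what the system can learn from

    def history_for(self, object_id: str) -> list[ProposedAction]:
        # Contextual action history: everything proposed for one object.
        return [a for a in self._actions if a.object_id == object_id]

    def approval_rates(self) -> dict[str, float]:
        # A simple feedback signal per action type (an assumption); a real system
        # might feed decisions and notes back into the agent's prompts or policy.
        counts = defaultdict(lambda: [0, 0])  # action_type -> [approved, decided]
        for a in self._actions:
            if a.status is not Status.PENDING:
                counts[a.action_type][1] += 1
                if a.status is Status.APPROVED:
                    counts[a.action_type][0] += 1
        return {t: ok / total for t, (ok, total) in counts.items()}
```

For example, an agent handling tenant communications might propose a rent reminder and a fee waiver for the same unit. The reviewer approves the reminder, rejects the waiver with a note, and both decisions stay visible in that unit’s history.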

Designing the transitions between jobs

Here’s where it gets complex. In enterprise products, AI often does all four jobs simultaneously. I’m working on agents that execute (handle tenant communications), inform (daily brief at login), suggest (next-step recommendations), and collaborate (chat with agents for complex cases).

When AI is doing all four jobs continuously across an organization, you need a different kind of interface: agent management.

Agent management isn’t a fifth job—it’s the oversight layer for AI that’s doing all four jobs at scale. The design challenge isn’t “how does one user interact with one AI” but “how does a team manage dozens of AI agents working across hundreds of business objects simultaneously.”

When you’re designing enterprise-grade SaaS products, you need to weave a user’s flow through these modes across an emerging landscape of interaction and UI patterns. A daily brief might direct you to an approval or task hand-off queue, where you might then collaborate on an analysis to select the next action, which was, alas, AI-suggested.

Are those transitions smooth? Or do users feel like they’re switching between separate products? Does the relevant context follow the user across modes? Are the UI patterns coherent and fit for purpose?

If your transitions are clunky, or your UX metaphors confusing, users will struggle to make sense of the chaos you’ve created.

What matters now for a competitive design strategy

Here’s what matters most:

Establish and iterate on AI-UX standards, frameworks, and metaphors that are fit for purpose and modality. Prioritize clarity and familiar patterns: users are learning AI interaction models across products. But don’t let existing conventions prevent you from exploring new approaches when they better serve your users’ goals.

Understand which mode you’re working on. Conceptualize your design ideas within a user flow that helps you understand the transitions. Test the coherence of the mental models you’re asserting with real users early and often.

Move design work to high-data-fidelity prototypes as early as possible: prototypes that can simulate real-world user and AI agent activity against real-world data sources.

Organize around rapid iteration using real-world usage data. Transcripts, session recordings, and click-path telemetry can inform incremental enhancements, while qualitative interviews can help the team shape bigger bets.

We’re in fall 2025, and I’m still figuring this out with my team. We’re building the routines to observe, iterate, and improve across all four jobs. It’s messy. The patterns are emerging, not settled.

Designing for uncertainty isn’t about eliminating it. It’s about making it visible, manageable, and transparent. And then using what you observe to get better.

References

Microsoft Design. (2024). “UX Design for Agents.” Foundational principles including appropriate trust and uncertainty as a design element. https://microsoft.design/articles/ux-design-for-agents/

Notion. (2024). Database Autofill feature launch. Product updates documenting 50%+ AI attach rate and community response.

OpenAI. (2025). “Introducing ChatGPT Pulse.” Proactive daily briefing feature announcement. September 2025.

Sharma, S. (2025). “Where Should AI Sit in Your UI? Mapping Emerging AI UI Patterns and How Spatial UI Choices Shape AI Experiences.” UX Collective. Analysis of seven UI layouts and how spatial placement influences perceived AI roles. https://uxdesign.cc/where-should-ai-sit-in-your-ui-1710a258390e

Slack Design. (2025). “Prioritizing Craft in Recap, Our Newest AI-Powered Feature.” Design process for daily digest feature. https://slack.design/articles/prioritizing-craft-in-recap-our-newest-ai-powered-feature/

Skywork AI. (2025). “What is ChatGPT Pulse? OpenAI’s Proactive AI Daily Briefing.” Analysis of proactive information delivery patterns. https://skywork.ai/blog/chatgpt-pulse-openai-ai-daily-briefing/